Last updated 3 months ago

I. Introduction: The AI Boom and Its Physical Footprint

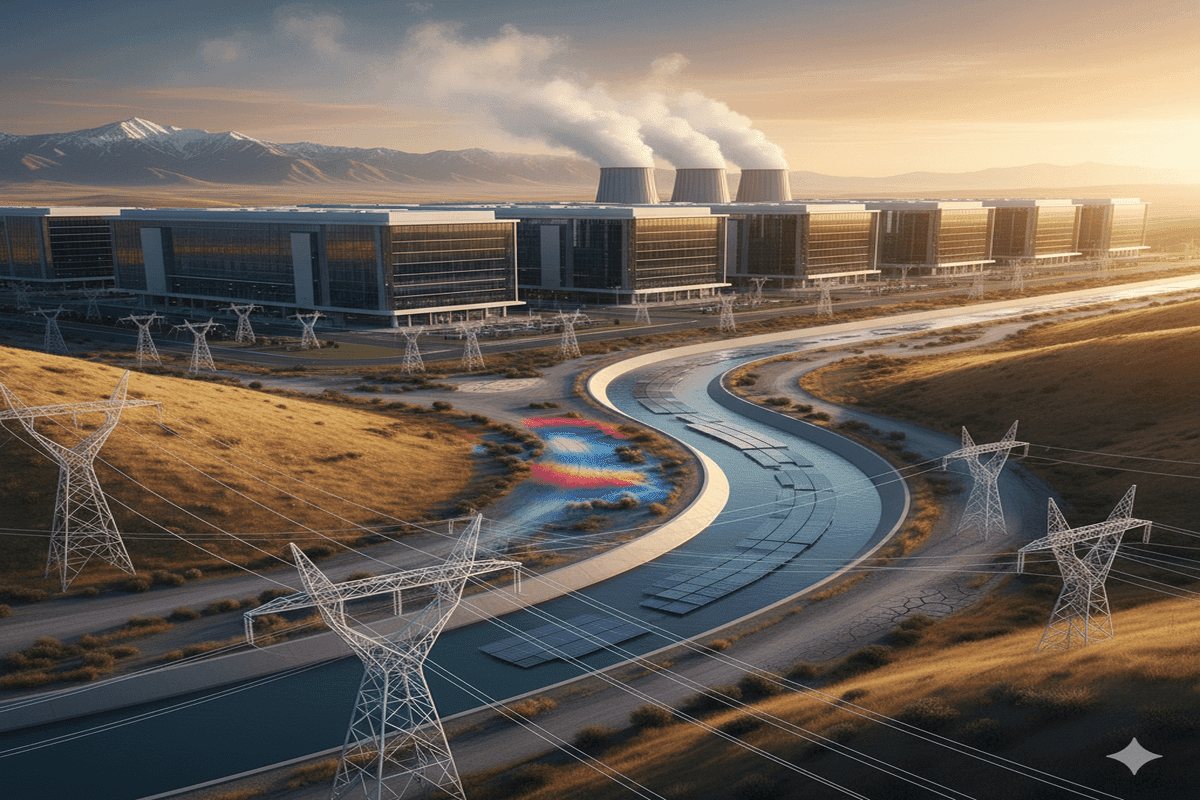

The artificial intelligence revolution has arrived with unprecedented speed. From ChatGPT’s explosive growth to Google’s Gemini and countless other AI applications, these powerful tools have become integral to our daily lives. Yet while we interact with AI through sleek interfaces and seamless conversations, there’s an invisible infrastructure working behind the scenes—one that carries a surprisingly heavy physical footprint.

This invisible technology demands very real and significant resources, particularly when it comes to energy consumption and water usage. As California positions itself as the epicenter of AI innovation, the state faces mounting pressure on its electrical grid and water supply. The rapid proliferation of AI data centers across the Golden State is creating unprecedented demands on infrastructure that was never designed to handle such intensive computational workloads.

This post explores how the rapid growth of AI data centers in California is creating new challenges for energy management and water conservation, and what solutions are emerging to address these critical sustainability concerns.

II. The Thirst for Power: AI’s Energy Demands

The Problem

AI models require extraordinary amounts of electricity for both training and inference—the process of generating responses to user queries. Unlike traditional computing tasks, AI operations involve massive parallel processing across thousands of specialized chips, creating energy demands that dwarf conventional data center operations.

Concrete Examples

The scale of AI’s energy appetite is staggering. Each ChatGPT query consumes significantly more energy than a simple Google search: a standard web search might use about 0.3 watt-hours, while a single AI query can consume about 2.9 watt-hours, nearly ten times as much energy per interaction.

The cumulative impact is enormous. Training a large language model like GPT-4 can consume as much electricity as 300 American homes use in an entire year. When millions of users interact with these systems daily, the per-query energy cost adds up in direct proportion to usage.
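To make the per-query comparison concrete, here is a minimal Python sketch of the arithmetic. The 0.3 Wh and 2.9 Wh figures come from the text above; the daily query volume is a hypothetical number chosen only for illustration, and the function names are ours.

```python
# Per-query energy figures quoted in this post, in watt-hours (Wh).
WEB_SEARCH_WH = 0.3
AI_QUERY_WH = 2.9


def energy_ratio(ai_wh: float = AI_QUERY_WH, search_wh: float = WEB_SEARCH_WH) -> float:
    """Ratio of the energy used by one AI query to one web search."""
    return ai_wh / search_wh


def daily_energy_mwh(queries_per_day: int, wh_per_query: float) -> float:
    """Total daily energy in megawatt-hours (1 MWh = 1,000,000 Wh)."""
    return queries_per_day * wh_per_query / 1_000_000


if __name__ == "__main__":
    # ASSUMPTION: 10 million queries per day is a made-up volume for illustration.
    hypothetical_queries = 10_000_000
    print(f"AI query vs. web search: {energy_ratio():.1f}x")
    print(f"AI queries:   {daily_energy_mwh(hypothetical_queries, AI_QUERY_WH):.1f} MWh/day")
    print(f"Web searches: {daily_energy_mwh(hypothetical_queries, WEB_SEARCH_WH):.1f} MWh/day")
```

At that hypothetical volume, the AI queries would draw about 29 MWh per day against about 3 MWh for the same number of web searches. The total grows linearly with the number of queries.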

The California Context

Data centers currently consume approximately 5,580 gigawatt-hours (GWh) per year of electricity in California, or about 2.6% of California’s 2023 electricity demand, according to the California Energy Commission. This substantial energy draw is putting additional strain on California’s power grid, particularly during peak demand periods and extreme weather events.
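As a rough sanity check on those two figures, the sketch below derives what they imply. It uses only the 5,580 GWh and 2.6% values quoted above; the function names are ours, and the "average load" assumes consumption is spread evenly across the year, which real facilities will not match exactly.

```python
# Figures quoted in this post (attributed to the California Energy Commission).
DATA_CENTER_GWH_PER_YEAR = 5_580
DATA_CENTER_SHARE = 0.026  # 2.6% of 2023 electricity demand
HOURS_PER_YEAR = 8_760     # 365 days x 24 hours; 2023 was not a leap year


def implied_statewide_demand_gwh(dc_gwh: float, share: float) -> float:
    """Statewide annual demand implied by the data center total and its share."""
    return dc_gwh / share


def average_load_mw(annual_gwh: float, hours: int = HOURS_PER_YEAR) -> float:
    """Average continuous load in megawatts, ASSUMING perfectly even usage."""
    return annual_gwh * 1_000 / hours  # 1 GWh = 1,000 MWh


if __name__ == "__main__":
    total = implied_statewide_demand_gwh(DATA_CENTER_GWH_PER_YEAR, DATA_CENTER_SHARE)
    print(f"Implied statewide demand: {total:,.0f} GWh/year")
    print(f"Average data center load: {average_load_mw(DATA_CENTER_GWH_PER_YEAR):,.0f} MW")
```

The two numbers imply statewide demand of roughly 215,000 GWh per year and an average data center load of roughly 640 MW around the clock.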

The challenge is compounded by California’s ambitious renewable energy goals and the need to maintain grid stability. As AI adoption accelerates, the state must rapidly expand its infrastructure capacity while simultaneously transitioning to cleaner energy sources. This creates potential conflicts between sustainability objectives and the immediate power needs of the AI economy.

III. The Need for Water: Cooling the Computers

The Science

Data centers generate enormous amounts of heat from their high-performance computing operations. To prevent catastrophic overheating that could damage expensive equipment, these facilities require sophisticated cooling systems that rely heavily on water. The cooling process is absolutely critical—servers operating at full capacity can reach temperatures that would cause permanent damage within minutes without proper thermal management.

Most traditional data centers use water-based cooling systems that work similarly to a giant air conditioning unit. Water absorbs heat from the servers, then evaporates or is cycled through cooling towers where the heat dissipates into the atmosphere. This process requires a constant supply of fresh water to replace what’s lost through evaporation.

The Problem in a Drought-Prone State

California’s chronic water scarcity makes AI’s cooling demands particularly problematic. The state frequently experiences drought conditions, with water restrictions affecting agriculture, urban development, and industrial operations. Adding massive data centers to this equation creates additional pressure on already strained water resources.

In the US, an average 100-megawatt data center, which uses more power than 75,000 homes combined, also consumes about 2 million liters of water per day, according to an April report on energy and AI from the International Energy Agency (IEA). This consumption level is equivalent to the water needs of a small town—and it’s often drawn from the same freshwater supplies that communities depend on for drinking, agriculture, and other essential needs.
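A short Python sketch can put the daily figure into other units. It uses the 2 million liters per day and 100 MW quoted above. The liters-per-kWh result assumes the facility draws its full 100 MW continuously, which is our simplification and not a claim from the IEA report.

```python
# Figures quoted in this post for an average 100 MW US data center.
LITERS_PER_DAY = 2_000_000
FACILITY_MW = 100
LITERS_PER_US_GALLON = 3.785411784  # exact definition of the US liquid gallon


def annual_liters(liters_per_day: float) -> float:
    """Water use over a 365-day year."""
    return liters_per_day * 365


def us_gallons(liters: float) -> float:
    """Convert liters to US gallons."""
    return liters / LITERS_PER_US_GALLON


def liters_per_kwh(liters_per_day: float, facility_mw: float) -> float:
    """Water intensity, ASSUMING the facility runs at full load 24 hours a day."""
    kwh_per_day = facility_mw * 1_000 * 24
    return liters_per_day / kwh_per_day


if __name__ == "__main__":
    print(f"Per year:  {annual_liters(LITERS_PER_DAY):,.0f} liters")
    print(f"Per day:   {us_gallons(LITERS_PER_DAY):,.0f} US gallons")
    print(f"Intensity: {liters_per_kwh(LITERS_PER_DAY, FACILITY_MW):.2f} L/kWh")
```

Under that full-load assumption, the facility uses about 730 million liters a year, or a little under one liter of water per kilowatt-hour. A facility running below full load would show a higher per-kWh figure for the same water use.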

The water usage becomes even more concerning when considering that many of these data centers are located in regions already experiencing water stress. Unlike municipal uses, where much of the water is treated and returned to local systems, a large share of the water used in evaporative cooling leaves the site as vapor and is not available for local reuse.

IV. Solutions and the Path Forward

Technological Innovations

The technology sector is rapidly developing more efficient cooling solutions to address these environmental challenges. Advanced cooling technologies like direct-to-chip cooling and liquid immersion cooling are showing remarkable promise. These systems can reduce water consumption by up to 90% compared to traditional cooling methods by delivering coolant directly to heat sources rather than cooling entire facility spaces.

Liquid immersion cooling, where servers are submerged in non-conductive fluids, represents one of the most promising advances. This approach not only dramatically reduces water usage but also improves energy efficiency by enabling more precise temperature control. Companies like Microsoft and Google are already piloting these technologies in their newest facilities.

Policy and Regulation

California legislators are taking action to address data center resource consumption through targeted legislation. SB 58 would provide a tax credit to data centers that use at least 70% carbon-free energy, draw at least 50% of their energy supply from behind-the-meter sources, do not use diesel fuel, and adopt recycled-water cooling within five years of the certification effective date.

Three state lawmakers have also introduced bills aimed at curbing the amounts of electricity and water used to power artificial intelligence and data processing centers amid renewed scrutiny over the state’s water management. These legislative initiatives focus on transparency requirements, efficiency standards, and incentives for sustainable practices.
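The four conditions can be read as a simple checklist. The Python sketch below encodes them as summarized in this post, not as they appear in the bill text. The class, field names, and function are hypothetical, and the actual legislation may define these terms differently.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DataCenterProfile:
    """Hypothetical facility description; field names are illustrative only."""
    carbon_free_share: float       # fraction of energy that is carbon-free, 0.0 to 1.0
    behind_the_meter_share: float  # fraction of supply from behind-the-meter sources
    uses_diesel: bool
    # Years after certification until recycled-water cooling is in place (None = no plan).
    years_to_recycled_water: Optional[float]


def unmet_criteria(p: DataCenterProfile) -> List[str]:
    """Return the criteria (as summarized in this post) that the facility fails."""
    unmet = []
    if p.carbon_free_share < 0.70:
        unmet.append("carbon-free energy below 70%")
    if p.behind_the_meter_share < 0.50:
        unmet.append("behind-the-meter supply below 50%")
    if p.uses_diesel:
        unmet.append("uses diesel fuel")
    if p.years_to_recycled_water is None or p.years_to_recycled_water > 5:
        unmet.append("no recycled-water cooling within five years")
    return unmet


if __name__ == "__main__":
    site = DataCenterProfile(0.75, 0.40, False, 3)
    print(unmet_criteria(site))  # lists only the behind-the-meter shortfall
```

Returning the list of unmet conditions, rather than a single yes or no, shows an operator which requirement stands between the facility and the credit.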

The regulatory approach includes mandatory reporting of energy and water usage, efficiency benchmarks that data centers must meet, and financial incentives for adopting green technologies. These policies aim to balance the economic benefits of AI development with environmental stewardship.

Company Initiatives

Leading technology companies are investing heavily in sustainable data center operations. Many are committing to 100% renewable energy for their facilities, implementing advanced cooling technologies, and exploring alternative water sources like recycled wastewater. Some companies are even experimenting with locating data centers in naturally cold climates to reduce cooling demands.

Major cloud providers are also developing more efficient AI chips and optimizing software to reduce computational requirements. These improvements can significantly decrease the energy and water needed to deliver AI services while maintaining performance quality.

V. Conclusion: Balancing Innovation and Sustainability

The massive energy and water demands of AI data centers represent one of the most significant environmental challenges of the digital age. As California continues to lead AI innovation, the state faces the complex task of supporting technological advancement while protecting its precious natural resources.

While AI offers transformative benefits for society—from advancing medical research to improving climate modeling—we cannot ignore its environmental costs. The good news is that rapid progress in cooling technology, renewable energy integration, and policy frameworks provides a pathway toward sustainable AI infrastructure.

Success will require unprecedented collaboration between technology companies, policymakers, environmental advocates, and the public. By working together to implement innovative solutions, establish effective regulations, and prioritize sustainability, California can maintain its position as an AI leader while protecting the environment for future generations.

The choices made today about AI infrastructure will shape both technological progress and environmental outcomes for decades to come. With thoughtful planning and a commitment to sustainability, it’s possible to harness AI’s potential without exhausting California’s energy and water supplies.

Relevant Resources

External Resources

- California Energy Commission Data Center Energy Usage Reports

- International Energy Agency – Energy and AI Report

- California State Water Resources Control Board

- Environmental and Energy Study Institute – Data Centers and Water Consumption

- Data Center Knowledge – Industry News and Trends

- California Legislative Information

- U.S. Department of Energy – Data Center Best Practices